NIR for Code Generation

Neural Integration of Iterative Reasoning (NIR) in LLMs for Code Generation

Abstract

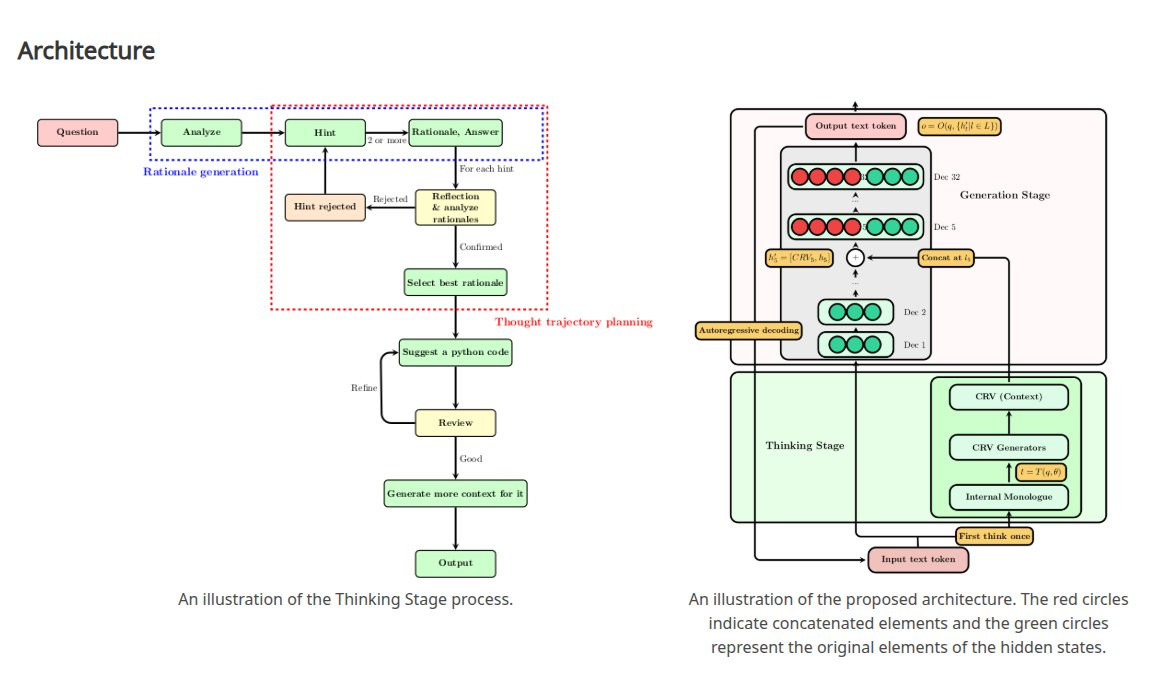

Despite advances in large language models (LLMs) for code generation, they still struggle to use contextual information effectively throughout the generation process. To tackle this challenge, we introduce the Neural Integration of Iterative Reasoning (NIR) framework, a new method for incorporating Context Representation Vectors (CRVs) at multiple levels within LLMs. NIR improves these models' ability to generate code without fine-tuning, so it can be used across various LLM architectures. We assess NIR with LLaMA 3.1 on the MBPP dataset, focusing on early, mid, and deep integration stages. Our experiments show that the depth of CRV integration has a notable impact on several facets of code generation, including response rates, syntactic correctness, and overall code structure. Deeper integration generally improves syntactic accuracy and code conciseness, while mid-layer integration performs best on semantic tasks. We report detailed evaluation metrics that assess code quality, complexity, and structure. Our findings indicate possible trade-offs among code quality measures and highlight the potential of adaptive integration strategies. While NIR demonstrates promising results, we also identify limitations such as dataset specificity and output inconsistencies. This study contributes to the understanding of contextual information processing in LLMs and may inform future developments in code LLMs. We conclude by outlining future research directions, including multi-layer integration and dynamic adaptation strategies.

Highlights:

✅ NIR dynamically recalculates RoPE (rotary position embeddings) for the modified hidden states and requires NO fine-tuning: a plug-and-play approach to enhancing code generation capabilities!

✅ Using Meta’s LLaMA 3.1-8B-instruct as our testbed, we found multiple trade-offs in code characteristics depending on where integration happens.

✅ A key insight: LLMs encode simpler code features in early layers and more sophisticated patterns in later ones, which can be helpful for targeted manipulation.

✅ The method is flexible: we can integrate arbitrary hidden states into arbitrary layers (though neighboring layers work best for model coherence).
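To make the highlights a bit more concrete, here is a minimal NumPy sketch of the general idea: extra hidden states are merged into a sequence, and the rotary position tables are then recomputed for the new length. This is a toy under stated assumptions, not the NIR implementation. In particular, prepending the CRV along the sequence axis is an assumption about how integration works, the names `rope_tables`, `apply_rope`, and `integrate_crv` are hypothetical, the base of 10000 is just a common RoPE default, and the rotation is applied directly to the merged vectors, whereas a real transformer applies RoPE to the query and key projections inside attention.

```python
import numpy as np


def rope_tables(seq_len, head_dim, base=10000.0):
    """Return cos/sin tables of shape (seq_len, head_dim // 2)."""
    # One rotation frequency per feature pair, as in standard RoPE.
    inv_freq = 1.0 / (base ** (np.arange(0, head_dim, 2) / head_dim))
    angles = np.outer(np.arange(seq_len), inv_freq)
    return np.cos(angles), np.sin(angles)


def apply_rope(x, cos, sin):
    """Rotate each (even, odd) feature pair of x by its position's angle."""
    even, odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = even * cos - odd * sin
    out[:, 1::2] = even * sin + odd * cos
    return out


def integrate_crv(hidden, crv):
    """ASSUMPTION: integrate by prepending the CRV along the sequence axis."""
    if hidden.shape[1] != crv.shape[1]:
        raise ValueError("hidden and crv must share the feature dimension")
    return np.concatenate([crv, hidden], axis=0)


rng = np.random.default_rng(0)
hidden = rng.standard_normal((4, 8))  # 4 query tokens, toy feature size 8
crv = rng.standard_normal((3, 8))     # 3 context positions (hypothetical CRV)

merged = integrate_crv(hidden, crv)   # sequence length grows from 4 to 7
# Positions changed, so the rotary tables are rebuilt for the new length.
cos, sin = rope_tables(merged.shape[0], merged.shape[1])
rotated = apply_rope(merged, cos, sin)
print(merged.shape, rotated.shape)
```

The point of the sketch is only that the position tables depend on the sequence length, so any change to the hidden states that shifts token positions calls for recomputing them. Other pairing conventions for the rotation exist, and implementations differ on which one they use.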

Interested in the full research? Please visit the NIR website to explore the methodology, results, and code implementation.

If you’d like to discuss this further, please don’t hesitate to reach out to me.

Collaboration

I’m interested in handling out-of-distribution (OOD) problems and those mentioned on my website 🌐. If you would like to collaborate, please reach out to me.