Hamiltonian Mechanics  ¶

¶

Hamiltonian mechanics is a way to describe how physical systems, like planets or pendulums, move over time, focusing on energy rather than just forces. By reframing complex dynamics through energy lenses, this 19th-century physics framework now powers cutting-edge generative AI. It uses generalized coordinates \( q \) (like position) and their conjugate momenta \( p \) (related to momentum), forming a phase space that captures the system's state. This approach is particularly useful for complex systems with many parts, making it easier to find patterns and conservation laws.

Mathematical Reformation: From Second-Order to Phase Flow  ¶

¶

Newton's \( F = m\ddot{q} \) requires solving second-order differential equations, which become unwieldy for constrained systems or when identifying conserved quantities.

The Core Idea

Hamiltonian mechanics splits \( \ddot{q} = F(q)/m \) into two first-order equations by introducing conjugate momentum \( p \):

It decomposes acceleration into complementary momentum/position flows. This phase space perspective reveals hidden geometric structure.

Lagrangian Prelude: Action Principles¶

The Lagrangian \( \mathcal{L}(q, \dot{q}) = K - U \) leads to Euler-Lagrange equations via variational calculus: $$ \frac{d}{dt}\left( \frac{\partial \mathcal{L}}{\partial \dot{q}} \right) - \frac{\partial \mathcal{L}}{\partial q} = 0 $$

Kinetic Energy Symbol

Note that the \( K \) in the \( \mathcal{L}(q, \dot{q}) = K - U \) is also represented as \( T \).

But these remain second-order. The critical leap comes through Legendre Transformation \( (\dot{q} \rightarrow p) \). The Hamiltonian is derived from the Lagrangian through a Legendre transformation by defining the conjugate momentum as \( p_i = \frac{\partial \mathcal{L}}{\partial \dot{q}_i} \); then the Hamiltonian can be written as: $$ H(q,p) = \sum_i p_i \dot{q}_i - \mathcal{L}(q, \dot{q}) $$

Annotated \( H(q,p) \)

We can write \( H(q,p) \) more intuitively as: $$ H(q,p) = K(p) + U(q) $$

Proof?

T is the Kinetic energy; for simplicity, I'll replace it with K.

The negative sign arises because we are subtracting the Lagrangian from the sum of the products of momenta and velocities. This ensures that the Hamiltonian represents the total energy of the system for conservative systems, where the Lagrangian is \( K - U \) and the Hamiltonian becomes \( K + U \).

For a simple system where \( K = \frac{1}{2}m\dot{q}^2 \) and \( U = U(q) \), the Hamiltonian would be:

- Kinetic Energy: \( K = \frac{1}{2}m\dot{q}^2 \)

- Potential Energy: \( U = U(q) \)

- Lagrangian: \( \mathcal{L} = \frac{1}{2}m\dot{q}^2 - U(q) \)

- Conjugate Momentum: \( p = m\dot{q} \)

- Hamiltonian: \( H = p\dot{q} - \mathcal{L} = p\frac{p}{m} - \left(\frac{1}{2}m\left(\frac{p}{m}\right)^2 - U(q)\right) = \frac{p^2}{m} - \frac{p^2}{2m} + U(q) = \frac{p^2}{2m} + U(q) = K(p) + U(q) \)

This flips the script: instead of \( \dot{q} \)-centric dynamics, we get symplectic phase flow.

Why This Matters

The Hamiltonian becomes the system's total energy \( H = K + U \) for many physical systems. It also provides a framework where time evolution is a canonical transformation - a symmetry preserving the fundamental Poisson bracket structure \( \{q_i, p_j\} = \delta_{ij} \).

Canonical and Non-Canonical Transformations

A canonical transformation is a change of variables that preserves the form of Hamilton's equations. It's like changing the map projection without altering the landscape.

Consider a simple translation: $$ Q = q + a, \quad P = p + b $$ This transformation preserves the Hamiltonian structure and Poisson bracket: \( \{Q, P\} = \{q + a, p + b\} = \{q, p\} = 1 = \delta_{ij} \)

- Preserves Hamiltonian structure.

- Maintains Poisson bracket invariance.

- Time evolution can be viewed as a canonical transformation.

On the other hand, non-canonical transformation changes the form of Hamilton's equations.

For example, consider the transformation:

The Poisson bracket is:

\( \{Q, P\} = \frac{\partial Q}{\partial q} \frac{\partial P}{\partial p} - \frac{\partial Q}{\partial p} \frac{\partial P}{\partial q} = 3q^2 \cdot 3p^2 - 0 = 9q^2p^2 \neq 1 \)

How to calculate those formula?

The Poisson bracket of two functions \( f \) and \( g \) is defined as: \( \{f, g\} = \sum_i \left( \frac{\partial f}{\partial q_i} \frac{\partial g}{\partial p_i} - \frac{\partial f}{\partial p_i} \frac{\partial g}{\partial q_i} \right) \)

Transformation 1: \( Q = q + a, \quad P = p + b \)

Partial Derivatives:

- \( \frac{\partial Q}{\partial q} = 1 \)

- \( \frac{\partial Q}{\partial p} = 0 \)

- \( \frac{\partial P}{\partial q} = 0 \)

- \( \frac{\partial P}{\partial p} = 1 \)

These derivatives can be represented in matrix form as: \( \frac{\partial (Q, P)}{\partial (q, p)} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} \)

This is a diagonal identity matrix, indicating that the transformation preserves the original structure.

Poisson Bracket Calculation \( \{Q, P\} = \frac{\partial Q}{\partial q} \frac{\partial P}{\partial p} - \frac{\partial Q}{\partial p} \frac{\partial P}{\partial q} = (1)(1) - (0)(0) = 1 \)

Transformation 2: \( Q = q^3, \quad P = p^3 \)

Partial Derivatives - \( \frac{\partial Q}{\partial q} = 3q^2 \) - \( \frac{\partial Q}{\partial p} = 0 \) - \( \frac{\partial P}{\partial q} = 0 \) - \( \frac{\partial P}{\partial p} = 3p^2 \)

These derivatives can be represented as: \( \frac{\partial (Q, P)}{\partial (q, p)} = \begin{pmatrix} 3q^2 & 0 \\ 0 & 3p^2 \end{pmatrix} \)

This is a diagonal matrix but not the identity matrix, indicating that the transformation does not preserve the original structure.

Poisson Bracket Calculation \( \{Q, P\} = \frac{\partial Q}{\partial q} \frac{\partial P}{\partial p} - \frac{\partial Q}{\partial p} \frac{\partial P}{\partial q} = (3q^2)(3p^2) - (0)(0) = 9q^2p^2 \)

- Transformation 1 preserves the Poisson bracket structure because it results in a constant value of 1, represented by an identity matrix.

- Transformation 2 does not preserve the Poisson bracket structure because the result depends on \( q \) and \( p \), represented by a non-identity diagonal matrix.

This transformation is not canonical because it does not preserve the Poisson bracket structure.

Newton vs. Lagrange vs. Hamilton: A Philosophical Showdown¶

| Aspect | Newtonian | Lagrangian | Hamiltonian |

|---|---|---|---|

| State Variables | Position \( x \) and velocity \( \dot{x} \) | Generalized coordinates \( q \) and velocities \( \dot{q} \) | Generalized coordinates \( q \) and conjugate momenta \( p \) |

| Formulation | Second-order differential equations \( (F=ma) \) | Principle of least action (\( \delta \int L \, dt = 0 \)) | First-order differential equations from Hamiltonian function (Phase flow \( (dH) \)) |

| Identifying Symmetries | Manual identification or through specific methods | Noether's theorem | Canonical transformations and Poisson brackets |

| Machine Learning Connection | Physics-informed neural networks, simulations | Optimal control, reinforcement learning | Hamiltonian Monte Carlo (HMC) sampling, energy-based models |

| Energy Conservation | Not inherent (must be derived) | Built-in through conservation laws | Central (Hamiltonian is energy) |

| General Coordinates | Possible, but often cumbersome | Natural fit | Natural fit |

| Time Reversibility | Yes | Yes | Yes, especially in symplectic formulations |

Hamilton's Equations: The Geometry of Phase Space  ¶

¶

The phase space is a mathematical space where we can represent the set of possible states of a physical system. For a system with \( n \) degrees of freedom, the phase space is a \( 2n \)-dimensional space, often visualized as a map where each point \( (q, p) \) represents a unique state. The evolution of the system is described by the motion of a point in this space, governed by Hamilton's equations.

This formulation offers several advantages. It makes it straightforward to identify conserved quantities and symmetries through canonical transformations and Poisson brackets, which provides deeper insights into the system's behavior. For instance, Liouville's theorem states that the volume in phase space occupied by an ensemble of systems remains constant over time, expressed as:

or equivalently:

where \( \rho(q, p, t) \) is the density function. This helps us to represent the phase space flows and how they preserve area under symplectic transformations. Its relation to symplectic geometry enables mathematical properties that are directly relevant to many numerical methods. For instance, it enables Hamiltonian Monte Carlo to perform well in high-dimensions by defining MCMC strategies that increases the chances of accepting a sample (particle).

Symplecticity: The Sacred Invariant¶

Hamiltonian flows preserve the symplectic 2-form \( \omega = \sum_i dq_i \wedge dp_i \).

Symplectic 2-form \( \omega \)

The symplectic 2-form, denoted by \( \omega = \sum_i dq_i \wedge dp_i \), is a mathematical object used in symplectic geometry. It measures the area of parallelograms formed by vectors in the tangent space of a phase space.

- \( dq_i \) and \( dp_i \): Infinitesimal changes in position and momentum coordinates.

- \( \wedge \): The wedge product, which combines differential forms in an antisymmetric way meaning that \( dq_i \wedge dp_i = -dp_i \wedge dq_i \).

- \( \sum_i \): Sum over all degrees of freedom.

Imagine a phase space where each point represents a state of a physical system. The symplectic form assigns a value to each pair of vectors, effectively measuring the area of the parallelogram they span. This area is preserved under Hamiltonian flows.

Key Properties

- Closed: \( d\omega = 0 \) which means its exterior derivative is zero \( d\omega=0 \). This property ensures that the form does not change under continuous transformations.

- Non-degenerate: The form is non-degenerate if \( d\omega(X,Y)=0 \) for all \( Y \)s, then \( X=0 \). This ensures that every vector has a unique "partner" vector such that their pairing under \( \omega \) is non-zero.

Example

For a simple harmonic oscillator with one degree of freedom, \( \omega = dq \wedge dp \). This measures the area of parallelograms in the phase space spanned by vectors representing changes in position and momentum.

A Very Simplistic PyTorch Code: While PyTorch doesn't directly handle differential forms, you can conceptually represent the symplectic form using tensors:

This code illustrates the antisymmetric nature of the wedge product.

Numerically, this means good integrators must respect:

Breaking Down the Formula

- Geometric numerical integration solves differential equations while preserving geometric properties of the system.

-

Symplecticity is a geometric property inherent to Hamiltonian systems. It ensures that the area of geometric structures (e.g., parallelograms) in phase space \( (q, p) \) remains constant over time. This is encoded in the symplectic form \( \omega = \sum_i dq_i \wedge dp_i \).

-

A numerical method is symplectic if it preserves \( \omega \). The Jacobian matrix of the transformation from \( (q(t), p(t)) \) to \( (q(t + \epsilon), p(t + \epsilon)) \) must satisfy the condition above.

-

The Jacobian matrix \( \frac{\partial (q(t + \epsilon), p(t + \epsilon))}{\partial (q(t), p(t))} \) quantifies how small changes in the initial state \( (q(t), p(t)) \) propagate to the next state \( (q(t + \epsilon), p(t + \epsilon)) \).

-

\( q(t) \) and \( p(t) \) : Position and momentum at time \( t \).

- \( q(t + \epsilon) \) and \( p(t + \epsilon) \) : Updated position and momentum after one time step \( \epsilon \).

- \( \frac{\partial}{\partial (q(t), p(t))} \) : Partial derivatives with respect to the initial state.

How are We Going to Solve it?¶

Numerical solvers for differential equations inevitably introduce errors that affect solution accuracy. These errors manifest as deviations from the true trajectory in phase space, particularly noticeable in energy-conserving systems like the harmonic oscillator. The errors fall into two main categories: local truncation error, arising from the approximation of continuous derivatives with discrete steps (proportional to \( \mathcal{O}(\epsilon^n+1) \) where \( \epsilon \) is the step size and n depends on the method); and global accumulation error, which compounds over integration time.

Forward Euler Method Fails at This!

Key Issue: Energy Drift from Non-Symplectic Updates¶

The forward Euler method (FEM) violates the geometric structure of Hamiltonian systems, leading to energy drift in long-term simulations. Let’s dissect why.

Forward Euler in Hamiltonian Systems¶

For a Hamiltonian \( H(q, p) \), the forward Euler updates position and momentum as: \( q(t + \epsilon) = q(t) + \epsilon \frac{\partial H}{\partial p}(q(t), p(t)),\quad p(t + \epsilon) = p(t) - \epsilon \frac{\partial H}{\partial q}(q(t), p(t)) \)

Unlike Leapfrog Verlet, these updates do not split the position/momentum dependencies, breaking symplecticity.

Step-by-Step Failure: Harmonic Oscillator Example¶

Hamiltonian: \( H = \frac{1}{2}(q^2 + p^2) \quad \text{(mass-spring system with m = k = 1 )} \)

Forward Euler Updates: \( q(t + \epsilon) = q(t) + \epsilon p(t) \quad \text{(position update)} \quad p(t + \epsilon) = p(t) - \epsilon q(t) \quad \text{(momentum update)} \)

Jacobian Matrix Analysis¶

The Jacobian \( M = \frac{\partial (q(t + \epsilon), p(t + \epsilon))}{\partial (q(t), p(t))} \) becomes: \( M = \begin{pmatrix} 1 & \epsilon \\ -\epsilon & 1 \end{pmatrix} \)

Symplectic Condition Check:

Does \( M^T J M = J \), where \( J = \begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix} \)?

-

Transpose \( M^T \): \( M^T = \begin{pmatrix} 1 & -\epsilon \\ \epsilon & 1 \end{pmatrix} \)

-

Compute \( J M \): \( J M = \begin{pmatrix} -\epsilon & 1 \\ -1 & -\epsilon \end{pmatrix} \)

-

Final Product \( M^T J M \): \( M^T J M = \begin{pmatrix} 0 & 1 + \epsilon^2 \\ -1 - \epsilon^2 & 0 \end{pmatrix} \neq J \)

The result violates \( M^T J M = J \), proving symplecticity fails unless \( \epsilon = 0 \).

Practical Consequences: Energy Drift¶

Why This Matters

- Energy Drift: FEM artificially injects/dissipates energy over time because \( H(q,p) \) is not conserved.

- Phase Space Distortion: Volume preservation fails, corrupting long-term trajectories.

- Unusable for HMC: Sampling in Hamiltonian Monte Carlo relies on symplectic integrators to maintain detailed balance.

Example: Simulating a harmonic oscillator with FEM shows spiraling/non-closing orbits in phase space, unlike the stable ellipses from Leapfrog Verlet.

To overcome this, we turn to symplectic integrators—methods that respect the underlying geometry of Hamiltonian systems, leading us naturally to the Leapfrog Verlet method, a powerful symplectic alternative. 🚀

Symplectic Numerical Integrators  ¶

¶

Leapfrog Verlet¶

For a separable Hamiltonian \( H(q,p) = K(p) + U(q) \), where the corresponding probability distribution is given by:

the Leapfrog Verlet integrator proceeds as follows:

This Störmer-Verlet scheme preserves symplecticity exactly, with local error \( \mathcal{O}(\epsilon^3) \) and global error \( \mathcal{O}(\epsilon^2) \). You can read more about numerical methods and analysis in Python here.

How Exactly?

-

Leapfrog Verlet Update Equations

For a separable Hamiltonian \( H(q, p) = K(p) + U(q) \), the method splits into three component-wise steps:-

Half-step momentum update: \( p_{i}\left(t + \frac{\epsilon}{2}\right) = p_{i}(t) - \frac{\epsilon}{2} \frac{\partial U}{\partial q_{i}}(q(t)) \)

-

Full-step position update: \( q_{i}(t + \epsilon) = q_{i}(t) + \epsilon \frac{\partial K}{\partial p_{i}}\left(p\left(t + \frac{\epsilon}{2}\right)\right) \)

-

Full-step momentum update: \( p_{i}(t + \epsilon) = p_{i}\left(t + \frac{\epsilon}{2}\right) - \frac{\epsilon}{2} \frac{\partial U}{\partial q_{i}}(q(t + \epsilon)) \)

-

-

Jacobian Matrix Calculation

For the harmonic oscillator \( H(q, p) = \frac{1}{2}(q^2 + p^2) \), the updates simplify to:\[ q(t + \epsilon) = q(t) + \epsilon p(t) - \frac{\epsilon^2}{2} q(t), \quad p(t + \epsilon) = p(t) - \epsilon q(t) - \frac{\epsilon^2}{2} p(t). \]The Jacobian matrix \( M = \frac{\partial (q(t + \epsilon), p(t + \epsilon))}{\partial (q(t), p(t))} \) becomes: \( M = \begin{pmatrix} 1 - \frac{\epsilon^2}{2} & \epsilon \\ -\epsilon & 1 - \frac{\epsilon^2}{2} \end{pmatrix}. \)

-

Transpose of \( M \)

The transpose \( M^T \) swaps off-diagonal terms: \( M^T = \begin{pmatrix} 1 - \frac{\epsilon^2}{2} & -\epsilon \\ \epsilon & 1 - \frac{\epsilon^2}{2} \end{pmatrix}. \) -

Verify \( M^T J M = J \)

Let \( J = \begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix} \). Compute \( M^T J M \):-

Calculate: \( J M = \begin{pmatrix} -\epsilon & 1 - \frac{\epsilon^2}{2} \\ -\left(1 - \frac{\epsilon^2}{2}\right) & -\epsilon \end{pmatrix}. \)

-

Calculate: \( M^T J M = \begin{pmatrix} 1 - \frac{\epsilon^2}{2} & -\epsilon \\ \epsilon & 1 - \frac{\epsilon^2}{2} \end{pmatrix} \begin{pmatrix} -\epsilon & 1 - \frac{\epsilon^2}{2} \\ -\left(1 - \frac{\epsilon^2}{2}\right) & -\epsilon \end{pmatrix}. \)

After matrix multiplication: \( M^T J M = \begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix} = J. \)

-

The Leapfrog Verlet method satisfies \( M^T J M = J \), proving it preserves the symplectic structure. This matches its theoretical property as a symplectic integrator.

Why Symplectic Matters¶

They're the reversible neural nets of physics simulations!

Symplectic integrators like Leapfrog Verlet are critical for long-term stability in Hamiltonian systems.

- Phase space preservation: The volume in \( (q, p) \)-space is conserved exactly, avoiding artificial energy drift.

- Approximate energy conservation: While energy \( H(q,p) \) is not perfectly conserved (due to \( \mathcal{O}(\epsilon^2) \) error), it oscillates near the true value over exponentially long timescales.

- Practical relevance: This makes symplectic integrators indispensable in molecular dynamics and Hamiltonian Monte Carlo (HMC), where accurate sampling relies on stable trajectories.

Euler's method (first-order) systematically injects energy into the system, causing the characteristic outward spiral seen in the plots. Modified Euler's method (second-order) significantly reduces this energy drift. Most importantly, symplectic integrators like the Leapfrog method preserve the geometric structure of Hamiltonian systems even with relatively large step sizes by maintaining phase space volume conservation. This structural preservation is why Leapfrog remains the preferred method for long-time simulations in molecular dynamics and astronomy, where energy conservation is critical despite the visible polygon-like discretization artifacts at large step sizes.

Non-symplectic methods (e.g., Euler-Maruyama) often fail catastrophically in these settings.

| Integrator | Symplecticity | Order | Type | Local Error | Global Error | Suitable For | Computational Cost |

|---|---|---|---|---|---|---|---|

| Euler Method |  | 1 | Explicit | O(ε²) | O(ε) | Quick prototypes and Short-term simulations of general ODEs | Low |

| Symplectically Euler |  | 1 | Explicit | O(ε²) | O(ε) | Simple Hamiltonian systems | Low |

| Leapfrog (Verlet) |  | 2 | Explicit | O(ε³) | O(ε²) | Molecular dynamics, Long-term simulations of Hamiltonian systems | Moderate |

| Runge-Kutta 4 |  | 4 | Explicit | O(ε⁵) | O(ε⁴) | Short-term accuracy, General ODEs, but not recommended for long-term Hamiltonian systems | High |

| Forest-Ruth Integrator |  | 4 | Explicit | O(ε⁵) | O(ε⁴) | High-accuracy long-term simulations | High |

| Yoshida 6th-order |  | 6 | Explicit | O(ε⁷) | O(ε⁶) | High-accuracy | High |

| Heun’s Method (RK2) |  | 2 | Explicit | O(ε³) | O(ε²) | General ODEs requiring moderate accuracy | Moderate |

| Third-order Runge-Kutta |  | 3 | Explicit | O(ε⁴) | O(ε³) | When higher accuracy than RK2 is needed without the cost of RK4 | High |

| Implicit Midpoint Rule |  | 2 | Implicit (solving equations) | O(ε³) | O(ε²) | Hamiltonian systems, stiff problems | High |

| Fourth-order Adams-Bashforth |  | 4 | Multi-step (explicit) | O(ε⁵) | O(ε⁴) | Non-stiff problems with smooth solutions, after initial steps | Low |

| Backward Euler Method |  | 1 | Implicit (solving equations) | O(ε²) | O(ε) | Stiff problems, where stability is crucial | High |

Hamiltonian Monte Carlo¶

Hamiltonian Monte Carlo (HMC) is a Markov chain Monte Carlo (MCMC) method that leverages Hamiltonian dynamics to efficiently sample from complex probability distributions, particularly in Bayesian statistics and machine learning.

From Phase Space to Probability Space¶

HMC interprets target distribution \( P(z) \) as a Boltzmann distribution:

Substituting into this formulation, the Hamiltonian gives us a joint density:

where \( p(q|D) \) is the likelihood of the given data \( D \) and T=1 and therefore removed. We estimate our posterior distribution using the potential energy \( U(q) \) since \( P(q,p) \) consists of two independent probability distributions.

Augment with artificial momentum \( p \sim \mathcal{N}(0,M) \), then simulate Hamiltonian dynamics to propose new \( q' \) based on the distribution of the position variables \( U(q) \) which acts as the "potential energy" of the target distribution \( P(q) \), thereby creating valleys at high-probability regions.

For more on HMC, check out this explanation or this tutorial.

- Physical Systems: \( H(q,p) = U(q) + K(p) \) represents total energy

- Sampling Systems: \( H(q,p) = -\log P(q) + \frac{1}{2}p^T M^{-1} p \) defines exploration dynamics

The kinetic energy with the popular form of \( K(p) = \frac{1}{2}p^T M^{-1} p \), often Gaussian, injects momentum to traverse these landscapes. Crucially, the mass matrix \( M \) plays the role of a preconditioner - diagonal \( M \) adapts to parameter scales, while dense \( M \) can align with correlation structure. \( M \) is symmetric, positive definite and typically diagonal.

What is Positive Definite?

Positive Definite: For any non-zero vector \( x \), the expression \( x^T M x \) is always positive. This ensures stability and efficiency.

a) Positive Definite Form: A bowl-shaped surface where all eigenvalues are positive, indicating a minimum.

b) Negative Definite Form: An inverted bowl where all eigenvalues are negative, indicating a maximum.

c) Indefinite Form: A saddle-shaped surface with both positive and negative eigenvalues, indicating neither a maximum nor a minimum.

Each subplot includes the matrix \( M \) and the corresponding quadratic form \( Q(x) = x^T M x \).

Kinetic Energy Choices

- Gaussian (Standard HMC): \( K(p) = \frac{1}{2}p^T M^{-1} p \)

Yields Euclidean trajectories, efficient for moderate dimensions. - Relativistic (Riemannian HMC): \( K(p) = \sqrt{p^T M^{-1} p + c^2} \)

Limits maximum velocity, preventing divergences in ill-conditioned spaces. - Adaptive (Surrogate Gradients): Learn \( K(p) \) via neural networks to match target geometry.

Key Intuition

The Hamiltonian \( H(q,p) = U(q) + \frac{1}{2}p^T M^{-1} p \) creates an energy landscape where momentum carries the sampler through high-probability regions, avoiding random walk behavior.

The HMC Algorithm¶

The algorithm involves:

-

Initialization: Start with an initial position \( q_0 \) and sample momentum \( p_0 \sim \mathcal{N}(0,M) \).

-

Leapfrog Integration: Use the leapfrog method to approximate Hamiltonian dynamics. For a step size \( \epsilon \) and L steps, update:

-

Half-step momentum: \( p(t + \frac{\epsilon}{2}) = p(t) - \frac{\epsilon}{2} \frac{\partial U}{\partial q}(q(t)) \)

- Full-step position: \( q(t + \epsilon) = q(t) + \epsilon \frac{\partial K}{\partial p}(p(t + \frac{\epsilon}{2})) \), where \( K(p) = \frac{1}{2} p^T M^{-1} p \), so \( \frac{\partial K}{\partial p} = M^{-1} p \)

- Full-step momentum: \( p(t + \epsilon) = p(t + \frac{\epsilon}{2}) - \frac{\epsilon}{2} \frac{\partial U}{\partial q}(q(t + \epsilon)) \)

This is repeated L times to get proposed \( \dot{q} \) and \( \dot{p} \).

- Metropolis-Hastings Acceptance: Accept the proposed \( \dot{q} \) with probability \( \min(1, e^{H(q_0,p_0) - H(\dot{q},\dot{p})}) \), where \( H(q,p) = U(q) + K(p) \).

This process generates a Markov chain with stationary distribution \( P(q) \), leveraging Hamiltonian dynamics to take larger, more efficient steps compared to random-walk methods.

Why Better Than Random Walk?

HMC navigates high-dimensional spaces along energy contours - like following mountain paths instead of wandering randomly!

Recap of the Hamilton's equations?

This coupled system drives \( (q,p) \) along iso-probability contours of \( P(q) \), with momentum rotating rather than resetting at each step like in Random Walk Metropolis--think of following mountain paths instead of wandering randomly! The key parameters - integration time \( \tau = L\epsilon \) and step size \( \epsilon \) - balance exploration vs. computational cost:

- Short \( \tau \): Local exploration, higher acceptance

- Long \( \tau \): Global moves, risk of U-turns (periodic orbits)

Key Parameters and Tuning

Tuning \( M \) to match the covariance of \( P(q) \) (e.g., via warmup adaptation) and setting \( \tau \sim \mathcal{O}(1/\lambda_{\text{max}}) \), where \( \lambda_{\text{max}} \) is the largest eigenvalue of \( \nabla^2 U \), often yields optimal mixing.

Connection with Energy-Based Models¶

Energy-based models (EBMs) are a class of generative models that define a probability distribution over data points using an energy function. The probability of a data point is proportional to \( e^{-E(x)} \), where \( E(x) \) is the energy function. This formulation is directly analogous to the Boltzmann distribution in statistical physics, where the probability is related to the energy of a state. In Hamiltonian mechanics, the Hamiltonian function \( H(q, p) \) represents the total energy of the system, and the probability distribution in phase space is given by \( e^{-H(q,p)/T} \), where \( T \) is the temperature.

In EBMs, Hamiltonian Monte Carlo (HMC) is often used to sample from the model's distribution. HMC leverages Hamiltonian dynamics to propose new states, which are then accepted or rejected based on the Metropolis-Hastings criterion. This method is particularly effective for high-dimensional problems, as it reduces the correlation between samples and allows for more efficient exploration of the state space. For instance, in image generation tasks, HMC can sample from the distribution defined by the energy function, facilitating the generation of high-quality images.

EBMs define probability through Hamiltonians:

Potential Research Directions¶

Potential Research Directions¶

Symplecticity in Machine Learning Models¶

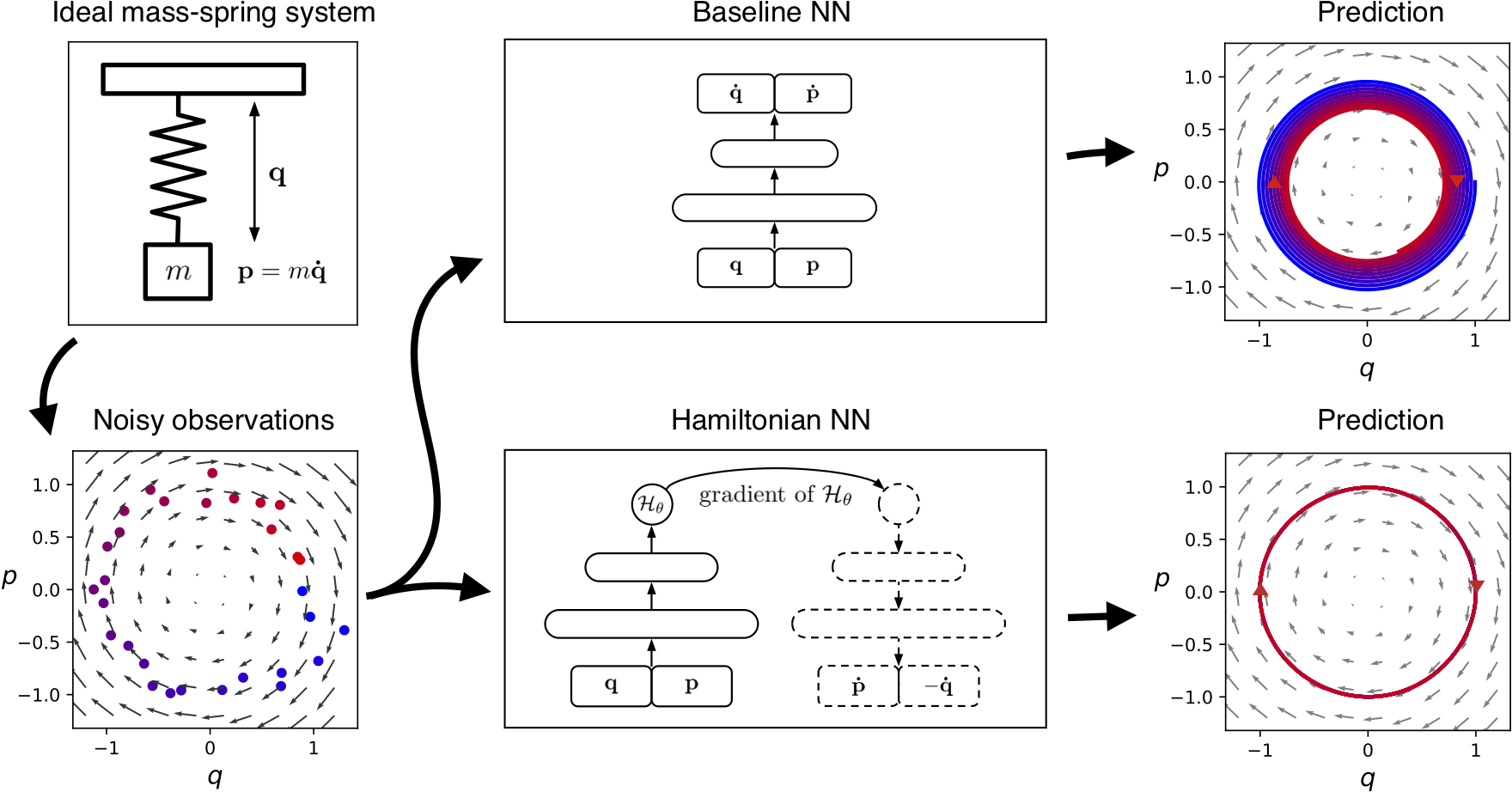

Incorporate the symplectic structure of Hamiltonian mechanics into machine learning models to preserve properties like energy conservation, which is crucial for long-term predictions. Generalizing Hamiltonian Neural Networks (HNNs), as discussed in Hamiltonian Neural Networks, to more complex systems or developing new architectures that preserve symplecticity

HMC for Complex Distributions:¶

HMC for sampling from complex, high-dimensional, and multimodal distributions, such as those encountered in deep learning. Combining HMC with other techniques, like parallel tempering, could handle distributions with multiple modes more effectively.

Combining Hamiltonian Mechanics with Other ML Techniques:¶

Integrate Hamiltonian mechanics with reinforcement learning to guide exploration in continuous state and action spaces. Using it to model the environment could improve exploration strategies, as seen in potential applications in robotics. Additionally, using Hamiltonian mechanics to define approximate posteriors in variational inference could lead to more flexible and accurate approximations.

Hamiltonian GANs¶

Employing Hamiltonian formalism as an inductive bias for the generation of physically plausible videos with neural networks. Imagine generator-discriminator dynamics governed by:

The possibilities make phase space feel infinite...

Want to Team Up on This 🤓¶

If you are an ML researcher and are interested in collaborating on researching EBMs, diffusion- and flow-based models, or have other relevant ideas in mind for generalization over out-of-distribution data (downstream tasks can be anything in from molecular design to robotics motion planning to LLMs), please feel free to reach out!

Also follow me on Twitter / BlueSky or GitHub—I’m usually rambling about this stuff there. Also on LinkedIn and Medium / TDS if you’re curious. To find more about my research interests, check out my personal website.

Useful Links¶

Useful Links¶

- An Introduction to Multistep Methods: Leap-frog

- The beginners guide to Hamiltonian Monte Carlo

- Hamiltonian Monte Carlo

- Hamiltonian Monte Carlo - Stan - Stan explained

- Hamiltonian Mechanics For Dummies: An Intuitive Introduction.

- Hamiltonian mechanics Wikipedia page

- An introduction to Lagrangian and Hamiltonian mechanics Lecture notes

-

Hamiltonian Mechanics - Jeremy Tatum, University of Victoria

- Hamiltonian Neural Networks

- Other: Natural Intelligence - A blog by Sam Greydanus - Many interesting topics